The VMware Container Service Extension offers a nice integration of Kubernetes with the NSX Advanced Load Balancer (formerly known as Avi Load Balancer).

With the following steps, you can create a demo nginx deployment and expose it to the VMware Cloud Director external network:

$ kubectl create deployment nginx --image=nginx
$ kubectl expose deployment nginx --type=LoadBalancer --port=80

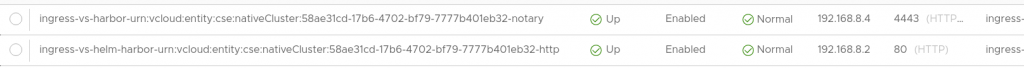

You might have noticed that an internal virtual IP address within the 192.168.8.x range is assigned!

I have often been asked whether and how this IP address range can be changed.

Yes, it is possible to change the IP address range, but some Kubernetes magic is needed!

Disclaimer: You execute the steps described below at your own risk!

First of all, you have to back up the original config! If you do not back up your config, there is a high risk of destroying your K8s cluster!

We have to figure out which configmap needs to be backed up. Look for the one with ccm in its name.

$ kubectl get configmaps -n kube-system
NAME                                      DATA   AGE
antrea-ca                                 1      27h
antrea-config-9c7h568bgf                  3      27h
cert-manager-cainjector-leader-election   0      26h
cert-manager-controller                   0      26h
coredns                                   1      27h
extension-apiserver-authentication        6      27h
kube-proxy                                2      27h
kube-root-ca.crt                          1      27h
kubeadm-config                            2      27h
kubelet-config-1.21                       1      27h
vcloud-ccm-configmap                      1      27h
vcloud-csi-configmap                      1      27h

You need to back up the vcloud-ccm-configmap!

$ kubectl get configmap vcloud-ccm-configmap -o yaml -n kube-system > ccm-configmap-backup.yaml
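Before changing anything, it can help to confirm which range the backup actually contains. The following is a small sketch, assuming the values are written as quoted strings on their own lines, as in the config shown in this post. `show_vip_range` is a hypothetical helper name, not part of kubectl or the Container Service Extension.

```shell
# Print the startIP and endIP values found in the given file, one per line.
# Only lines that start with (indented) "startIP:" or "endIP:" are matched,
# so the JSON in the last-applied-configuration annotation is ignored.
show_vip_range() {
  sed -n \
    -e 's/^[[:space:]]*startIP:[[:space:]]*"\([^"]*\)".*/startIP=\1/p' \
    -e 's/^[[:space:]]*endIP:[[:space:]]*"\([^"]*\)".*/endIP=\1/p' \
    "$1"
}

# Usage, with the backup file created above:
#   show_vip_range ccm-configmap-backup.yaml
#   startIP=192.168.8.2
#   endIP=192.168.8.100
```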

As the next and more important step, you have to back up the CCM deployment config.

Use kubectl to figure out which pod needs to be backed up. Typically, the pod is deployed in the kube-system namespace. Look for a pod named vmware-cloud-director-ccm-*.

$ kubectl get pods -n kube-system
NAME                                         READY   STATUS    RESTARTS   AGE
antrea-agent-4t9wb                           2/2     Running   0          27h
antrea-agent-5dhz9                           2/2     Running   0          27h
antrea-agent-tfrqv                           2/2     Running   0          27h
antrea-controller-5456b989f5-fz45d           1/1     Running   0          27h
coredns-76c9c76db4-dz7sl                     1/1     Running   0          27h
coredns-76c9c76db4-tlggh                     1/1     Running   0          27h
csi-vcd-controllerplugin-0                   3/3     Running   0          27h
csi-vcd-nodeplugin-7w9k5                     2/2     Running   0          27h
csi-vcd-nodeplugin-bppmr                     2/2     Running   0          27h
etcd-mstr-7byg                               1/1     Running   0          27h
kube-apiserver-mstr-7byg                     1/1     Running   0          27h
kube-controller-manager-mstr-7byg            1/1     Running   0          27h
kube-proxy-5kk9j                             1/1     Running   0          27h
kube-proxy-psxlr                             1/1     Running   0          27h
kube-proxy-sh68t                             1/1     Running   0          27h
kube-scheduler-mstr-7byg                     1/1     Running   0          27h
vmware-cloud-director-ccm-669599b5b5-z572s   1/1     Running   0          27h

$ kubectl get pod vmware-cloud-director-ccm-669599b5b5-z572s -n kube-system -o yaml > ccm-deployment-backup.yaml
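The pod name carries a generated suffix, so it will differ in your cluster. As a rough sketch, the lookup can be scripted instead of copied by hand. `find_ccm_pod` is a hypothetical helper; it only assumes that the pod name starts with `vmware-cloud-director-ccm-`, as in the listing above.

```shell
# Read "kubectl get pods --no-headers" style output on stdin and print the
# name of the first pod whose name starts with the CCM prefix.
# Exits non-zero if no such pod is listed.
find_ccm_pod() {
  awk '$1 ~ /^vmware-cloud-director-ccm-/ { print $1; found = 1; exit }
       END { exit !found }'
}

# Usage against a real cluster:
#   pod=$(kubectl get pods -n kube-system --no-headers | find_ccm_pod)
#   kubectl get pod "$pod" -n kube-system -o yaml > ccm-deployment-backup.yaml
```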

Copy ccm-configmap-backup.yaml to another file, for example ccm-configmap-new.yaml. Open ccm-configmap-new.yaml in a text editor like vim and change startIP and endIP according to your needs!

apiVersion: v1
data:
  vcloud-ccm-config.yaml: |
    vcd:
      host: "https://vcd.berner.ws"
      org: "next-gen"
      vdc: "next-gen-ovdc"
      vAppName: ClusterAPI-MGMT
      network: "next-gen-int"
      vipSubnet: ""
    loadbalancer:
      oneArm:
        startIP: "192.168.8.2"
        endIP: "192.168.8.100"
      ports:
        http: 80
        https: 443
      certAlias: urn:vcloud:entity:cse:nativeCluster:58ae31cd-17b6-4702-bf79-7777b401eb32-cert
    clusterid: urn:vcloud:entity:cse:nativeCluster:58ae31cd-17b6-4702-bf79-7777b401eb32
immutable: true
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"vcloud-ccm-config.yaml":"vcd:\n host: \"https://vcd.berner.ws\"\n org: \"next-gen\"\n vdc: \"next-gen-ovdc\"\n vAppName: ClusterAPI-MGMT\n network: \"next-gen-int\"\n vipSubnet: \"\"\nloadbalancer:\n oneArm:\n startIP: \"192.168.8.2\"\n endIP: \"192.168.8.100\"\n ports:\n http: 80\n https: 443\n certAlias: urn:vcloud:entity:cse:nativeCluster:58ae31cd-17b6-4702-bf79-7777b401eb32-cert\nclusterid: urn:vcloud:entity:cse:nativeCluster:58ae31cd-17b6-4702-bf79-7777b401eb32\n"},"immutable":true,"kind":"ConfigMap","metadata":{"annotations":{},"name":"vcloud-ccm-configmap","namespace":"kube-system"}}
  creationTimestamp: "2022-01-27T09:46:18Z"
  name: vcloud-ccm-configmap
  namespace: kube-system
  resourceVersion: "440"
  uid: db3e8894-5060-44e2-b20f-1eda812f84a4
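If you prefer not to edit the file by hand, the change can also be scripted. This is a minimal sketch, assuming startIP and endIP are quoted values on their own lines as in the config above. `set_vip_range` is a hypothetical helper, and the 192.168.9.x addresses in the usage comment are example values only.

```shell
# Rewrite the startIP and endIP values in FILE.
# The patterns are anchored to the start of the line, so only the indented
# "startIP:" / "endIP:" lines are changed; the JSON in the
# last-applied-configuration annotation is left as it is.
set_vip_range() {
  file=$1
  start=$2
  end=$3
  tmp=$(mktemp) || return 1
  sed \
    -e "s|^\([[:space:]]*startIP:[[:space:]]*\)\"[^\"]*\"|\1\"$start\"|" \
    -e "s|^\([[:space:]]*endIP:[[:space:]]*\)\"[^\"]*\"|\1\"$end\"|" \
    "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through the original file so its permissions are preserved.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage (example range, pick your own):
#   cp ccm-configmap-backup.yaml ccm-configmap-new.yaml
#   set_vip_range ccm-configmap-new.yaml 192.168.9.2 192.168.9.100
```

Only the copy is modified, so ccm-configmap-backup.yaml stays untouched as the rollback file.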

PLEASE DOUBLE-CHECK that you have backed up your original config before continuing!
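One way to make that double-check less error-prone is a small guard that refuses to continue when a backup file is missing or empty. This is only a sketch; `check_backup` is a hypothetical helper, and it assumes the backups were written with `-o yaml` so that a top-level `kind:` line is present.

```shell
# Succeed only if FILE exists, is non-empty, and declares the expected
# top-level kind. Nested "kind:" entries are indented and do not match.
check_backup() {
  file=$1
  kind=$2
  if [ ! -s "$file" ]; then
    echo "backup missing or empty: $file" >&2
    return 1
  fi
  if ! grep -q "^kind: $kind\$" "$file"; then
    echo "no top-level 'kind: $kind' in $file" >&2
    return 1
  fi
}

# Usage with the two files created in this post:
#   check_backup ccm-configmap-backup.yaml ConfigMap &&
#   check_backup ccm-deployment-backup.yaml Pod &&
#   echo "backups look sane"
```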

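Because the ConfigMap is marked immutable: true, it cannot be changed in place; it has to be deleted and recreated. Run the following commands in this order. After applying the new config, the new virtual IP address range is used:

$ kubectl delete -f ccm-deployment-backup.yaml
$ kubectl delete -f ccm-configmap-backup.yaml
$ kubectl apply -f ccm-configmap-new.yaml
$ kubectl apply -f ccm-deployment-backup.yaml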
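To verify the result, you could expose a service again and check that its virtual IP falls inside the range you configured. The sketch below does the comparison in plain shell. `ip_to_int` and `ip_in_range` are hypothetical helpers, and the code assumes IPv4 addresses and a shell with 64-bit arithmetic.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# Returns non-zero for anything that is not four octets in 0..255.
ip_to_int() {
  case $1 in
    ''|*[!0-9.]*) return 1 ;;
  esac
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into its octets
  IFS=$old_ifs
  [ $# -eq 4 ] || return 1
  for octet in "$@"; do
    case $octet in
      ''|0?*) return 1 ;;   # reject empty octets and leading zeros
    esac
    [ "$octet" -le 255 ] || return 1
  done
  echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# Succeed if IP lies within START..END (inclusive); return 2 on bad input.
ip_in_range() {
  ip=$(ip_to_int "$1") && lo=$(ip_to_int "$2") && hi=$(ip_to_int "$3") || return 2
  [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}

# Usage (service name and range are examples):
#   vip=$(kubectl get svc nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
#   ip_in_range "$vip" 192.168.9.2 192.168.9.100 && echo "VIP $vip is in the new range"
```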